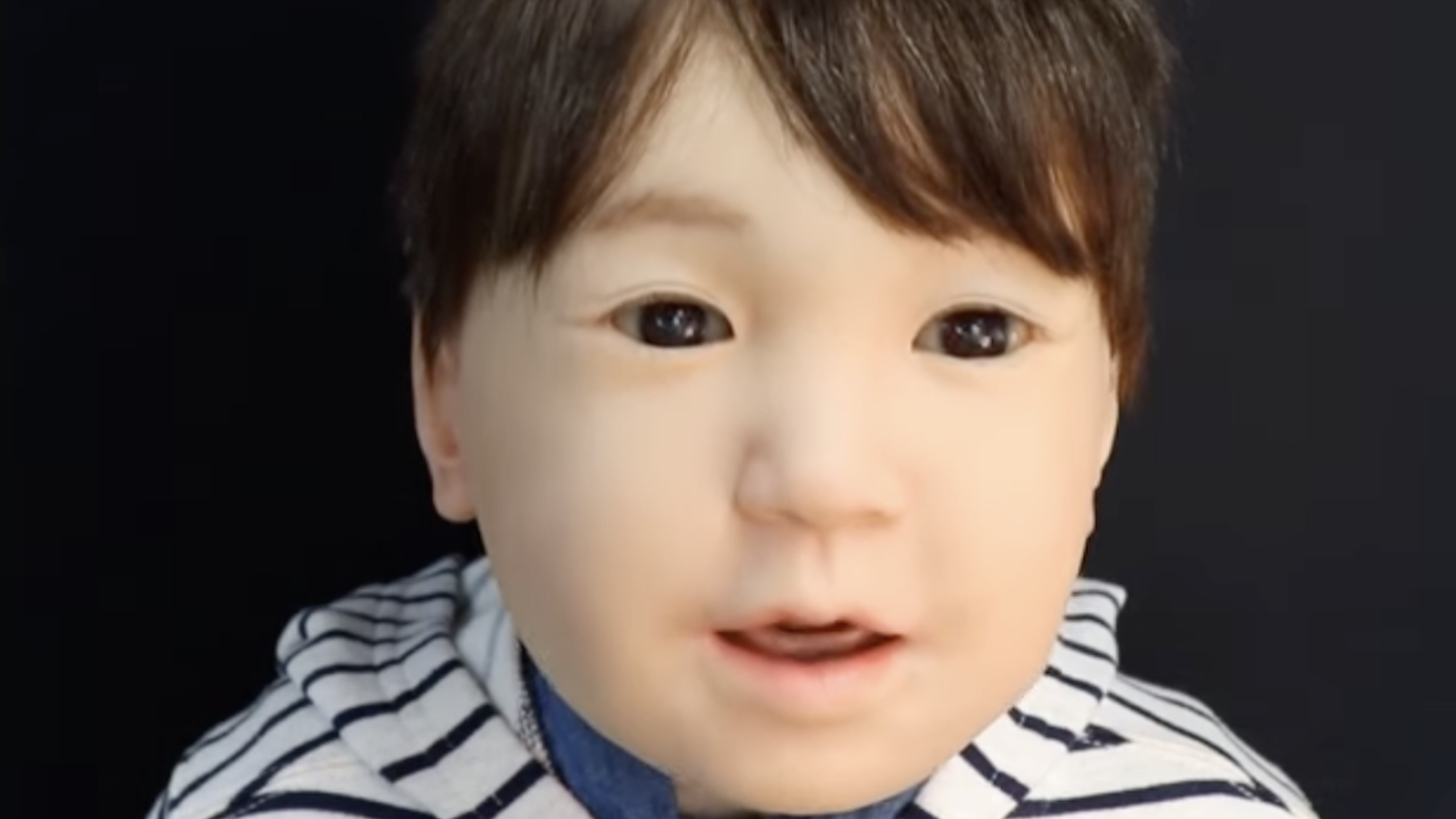

Creepy robot toddler can mimic human expressions

Bipedal robots (at least some of them) are becoming increasingly agile and humanlike in their movements. Despite this, one physical aspect remains stuck in the uncanny valley: realistic facial expressions. Robots still struggle to replicate the complex, fluid interactions of facial muscles at speeds comparable to those of their biological inspirations. One solution, however, may lie in treating expressions as an interplay between various “waveforms.” The result is a new “dynamic arousal expression” system developed by researchers at Osaka University that allows a bot to mimic expressions more quickly and seamlessly than its predecessors.

The potential solution, detailed in a study published in the Journal of Robotics and Mechatronics, first requires classifying facial gestures such as yawning, blinking, and breathing as individual waveforms. Each waveform is then linked to the amplitude of movements such as opening and closing the lips, moving the eyebrows, or angling the head. In this first case, the control parameter is based on a mood spectrum ranging from “sleepy” to “excited.” The waves then propagate and superpose on one another to adjust the robot face’s physical features according to its internal state. According to the study’s accompanying announcement, the new method frees programmers from having to prepare individualized, choreographed facial movements for each response state.

“The automatic generation of dynamic facial expressions to transmit the internal states of a robot, such as mood, is crucial for communication robots,” the team writes in the study, adding that current methods rely on a “patchwork-like replaying of recorded motions” that makes it difficult to achieve realistic results.

For example, a “sleepy” spectrum ranking generates certain results in the robot’s breathing, yawning, and blinking parameters. These subsequently compound on top of one another, further amplifying or minimizing facial movements like mouth size, eyelid flutters, and head tilts. Once calculated, the resulting physical representations are depicted near instantaneously.
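To make the idea of superposed waveforms more concrete, here is a minimal Python sketch of how it might work. It is not the researchers' implementation: the waveform shapes, the constants, the weight table, and names such as `face_pose` and `arousal` (0.0 for “sleepy,” 1.0 for “excited”) are all assumptions made purely for illustration.

```python
import math

# Hypothetical sketch: every constant, name, and waveform shape below is an
# illustrative assumption, not something taken from the Osaka University study.

def breathing(t, arousal):
    """Slow sinusoid; assumed to get faster and shallower as arousal rises."""
    freq = 0.2 + 0.3 * arousal            # cycles per second
    amp = 1.0 - 0.5 * arousal
    return amp * math.sin(2 * math.pi * freq * t)

def blinking(t, arousal):
    """Short periodic pulse; assumed to occur more often when sleepy."""
    period = 2.0 + 4.0 * arousal          # seconds between blinks
    return 1.0 if (t % period) < 0.15 else 0.0

def yawning(t, arousal):
    """Occasional slow bump; assumed to fade out as arousal rises."""
    period = 10.0
    phase = (t % period) / period
    bump = math.sin(math.pi * phase) ** 4  # peaks in the middle of each period
    return (1.0 - arousal) * bump

WAVEFORMS = {"breathing": breathing, "blinking": blinking, "yawning": yawning}

# How strongly each waveform drives each facial actuator (assumed weights).
WEIGHTS = {
    "mouth_open":   {"breathing": 0.1, "yawning": 0.9},
    "eyelid_close": {"blinking": 1.0, "yawning": 0.3},
    "head_tilt":    {"breathing": 0.2, "yawning": 0.4},
}

def face_pose(t, arousal):
    """Superpose the weighted waveforms into one value per actuator."""
    waves = {name: fn(t, arousal) for name, fn in WAVEFORMS.items()}
    return {
        actuator: sum(w * waves[name] for name, w in sources.items())
        for actuator, sources in WEIGHTS.items()
    }
```

Calling `face_pose(5.0, 0.0)` samples a fully sleepy face at the peak of a yawn, while `face_pose(5.0, 1.0)` samples an excited face at the same instant, with the yawn contribution gone. The point of the design is that a single mood value reshapes every waveform at once, so no expression has to be choreographed by hand.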

“Advancing this research in dynamic facial expression synthesis will enable robots capable of complex facial movements to exhibit more lively expressions and convey mood changes that respond to their surrounding circumstances, including interactions with humans,” said senior author Koichi Osuka.

While the system is arguably a step forward for realistic robots, it’s still hard to shake the spookiness of seeing it displayed on an android child. The facial movements look more fluid and natural than those of many other contemporary machines, but there’s no avoiding the fact that the toddler bot’s eyes are still clearly artificial. That they frequently appear to dart side to side and drift out of focus doesn’t help matters, either.

Still, treating facial features as an interplay between waves of varying strength and intensity appears to offer more realistic results than treating them as preprogrammed, one-to-one reactions. Regardless, showing off the next iteration on an adult robot might also lessen the overall creepiness.
